Blueprint Chapter - Ollama

Obtain Ollama

You can download the installer for a local setup from the official Ollama website: ollama.com

Alternatively, you can use Ollama through an Ollama API endpoint hosted by someone else.

If you run Ollama locally, use it to download a model to your machine.

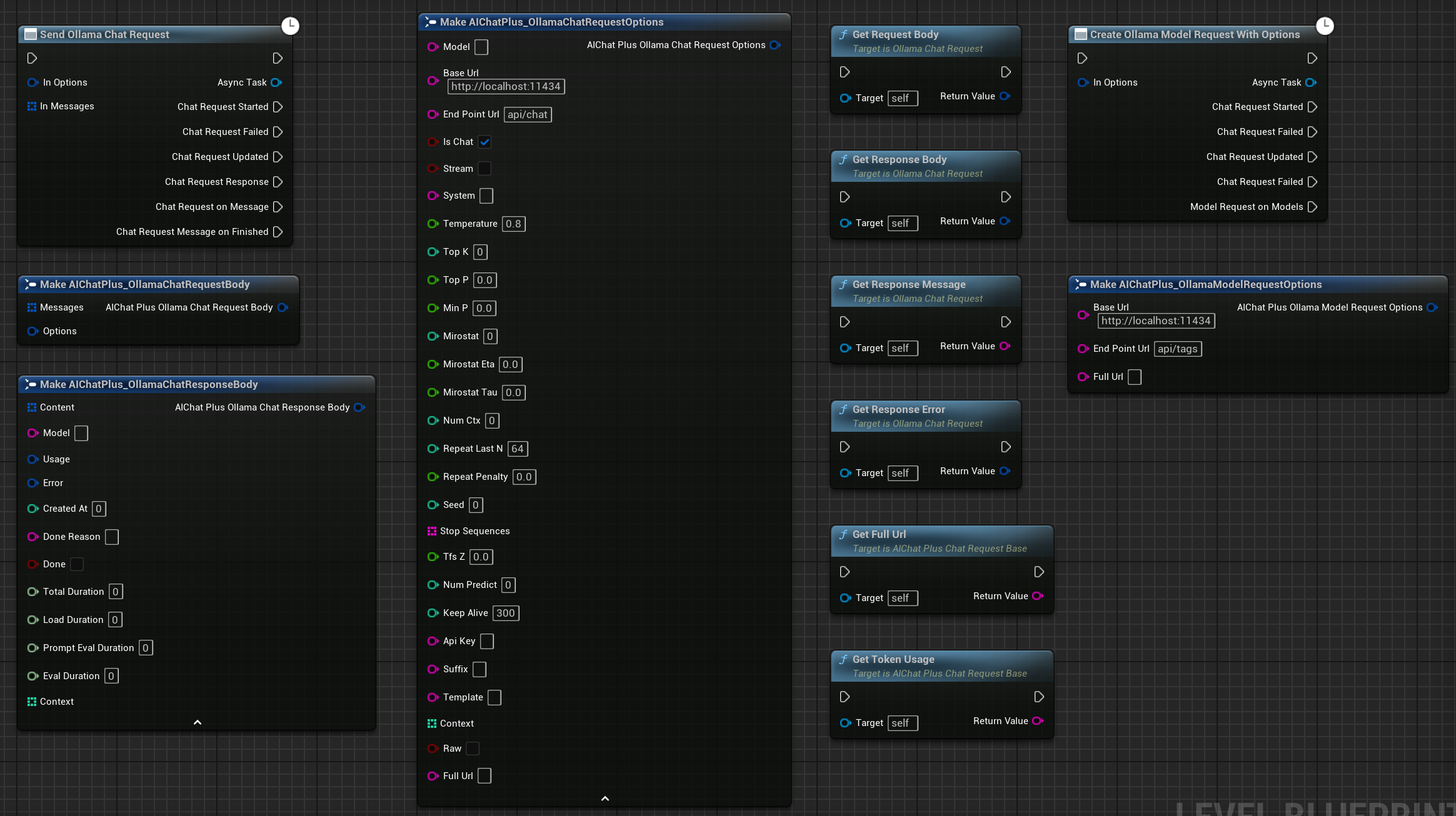

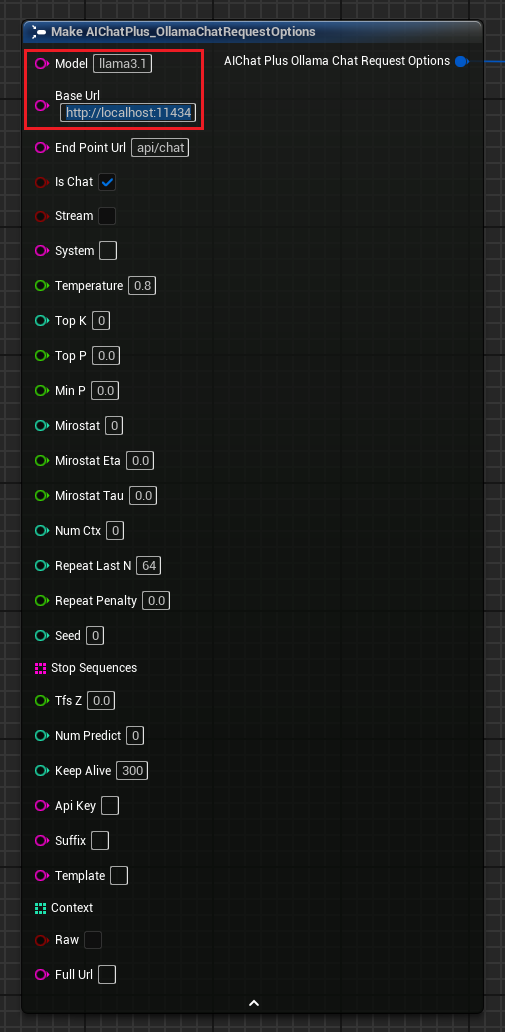

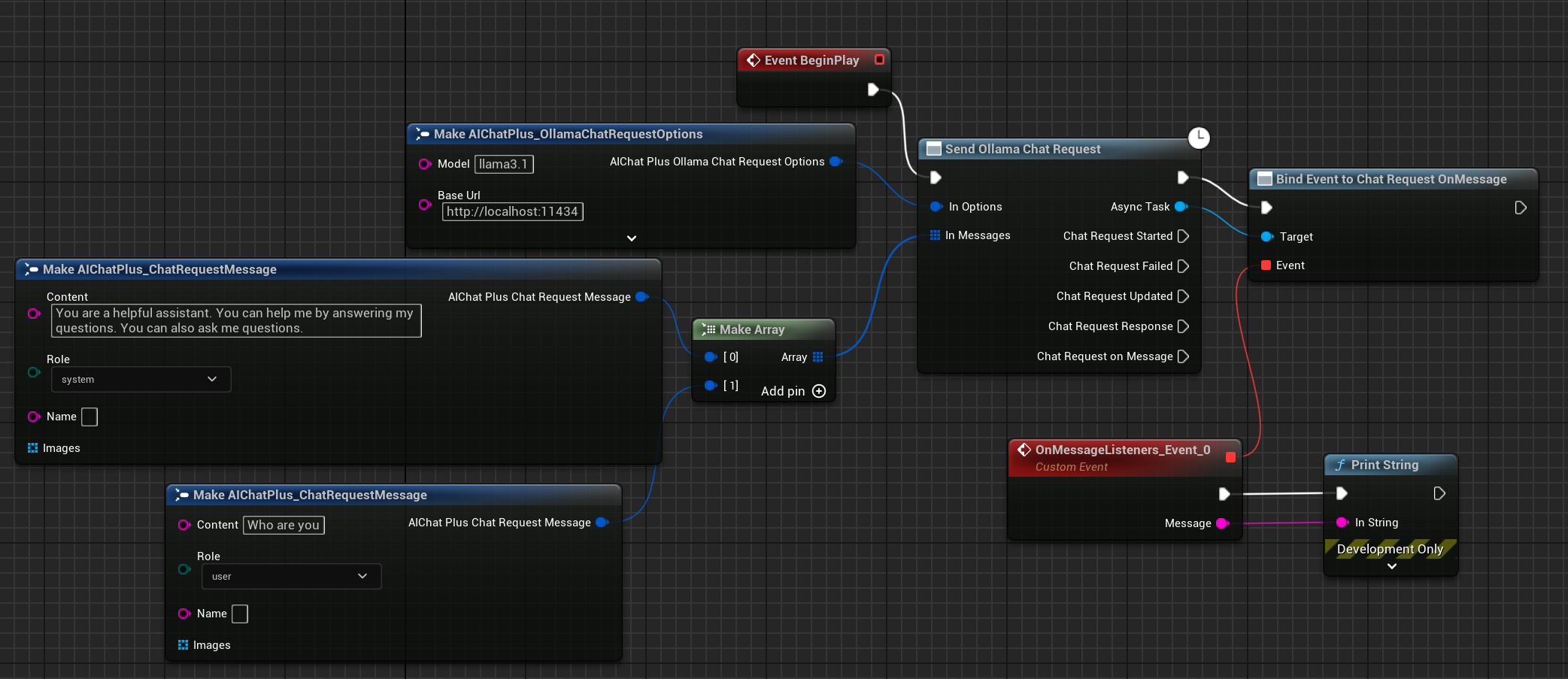
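For readers who prefer to script the download rather than use the command line, the following is a minimal sketch that builds a request for Ollama's REST endpoint `POST /api/pull`. It assumes a local server at the default address `http://localhost:11434`. The helper names `build_pull_request` and `pull_model` are hypothetical and are not part of the plugin or of Ollama. Older Ollama versions may expect the field `name` instead of `model`.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
BASE_URL = "http://localhost:11434"


def build_pull_request(model: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /api/pull request for the given model."""
    # stream=False asks the server for one final JSON object instead of progress lines.
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str, base_url: str = BASE_URL) -> dict:
    """Send the pull request and return the decoded JSON status (needs a running server)."""
    with urllib.request.urlopen(build_pull_request(model, base_url)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `pull_model("moondream:latest")` would start the download and return once it finishes. Large models can take a long time, so a streaming variant may be more practical for real use.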

Text Chat

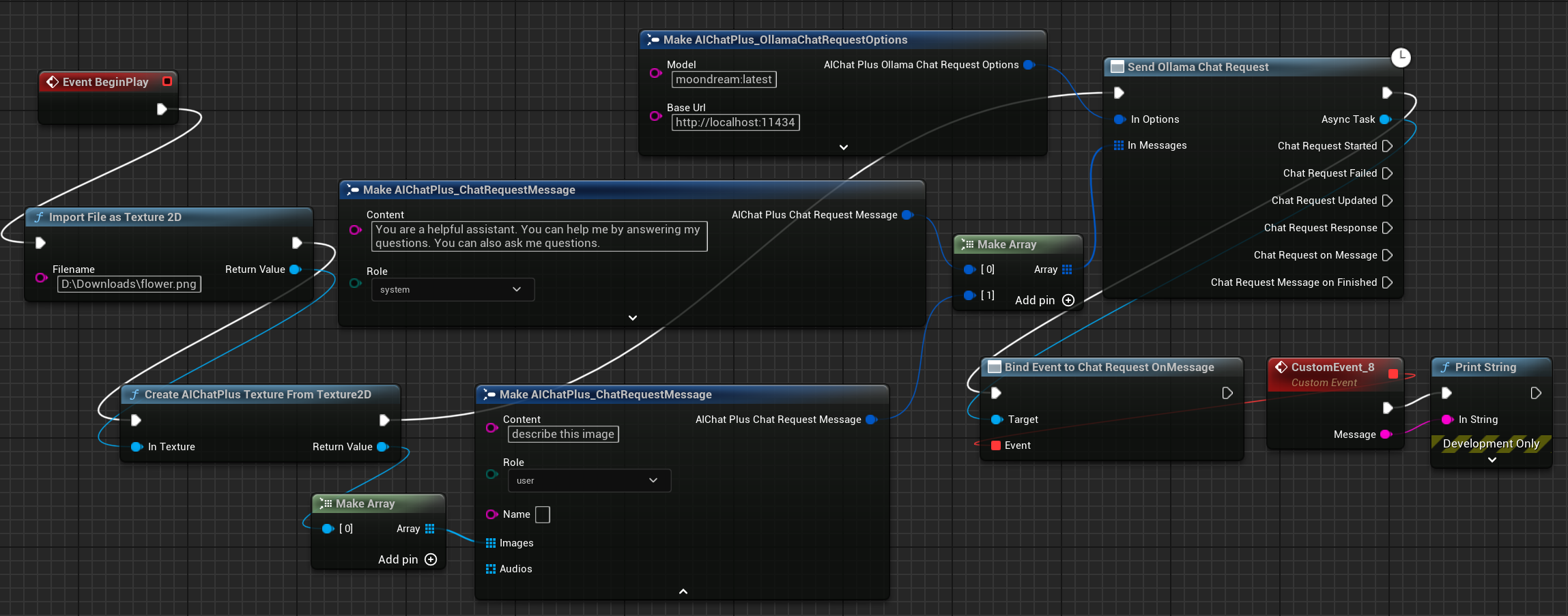

Create an "Ollama Options" node and set its "Model" and "Base Url" parameters. If Ollama is running locally, "Base Url" is usually "http://localhost:11434".

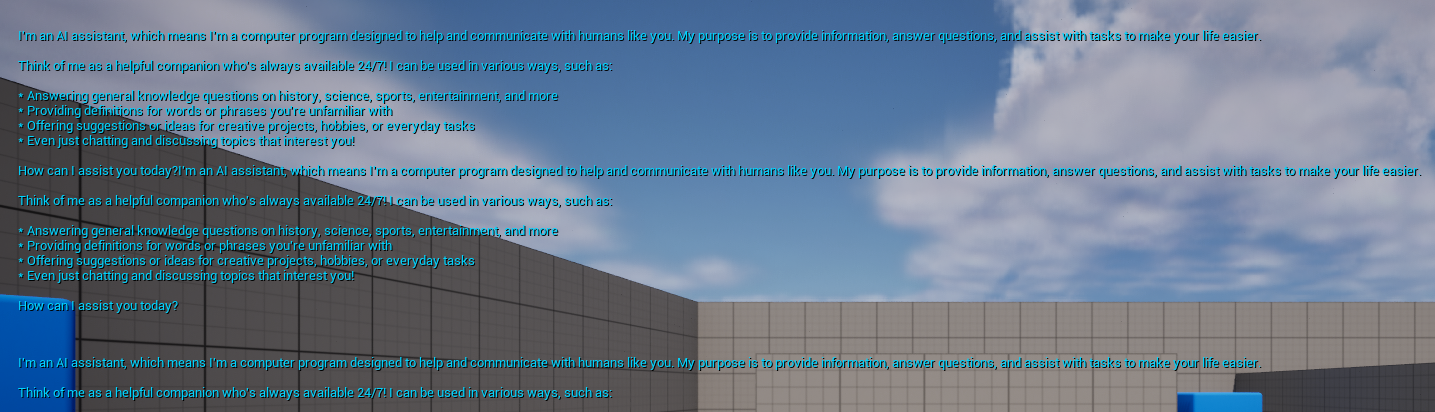
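Before wiring up the rest of the graph, it can help to confirm that the "Base Url" is reachable and that the chosen model is installed. The sketch below mirrors the two node parameters in a small Python class and queries Ollama's `GET /api/tags` endpoint, which lists locally installed models. The class `OllamaOptions` and the helper functions are hypothetical illustrations, not the plugin's implementation.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass
class OllamaOptions:
    """Hypothetical stand-in for the two parameters of the "Ollama Options" node."""
    model: str
    base_url: str = "http://localhost:11434"  # usual address of a local Ollama server

    def endpoint(self, path: str) -> str:
        """Join the base URL and an API path without doubling slashes."""
        return self.base_url.rstrip("/") + "/" + path.lstrip("/")


def model_names(tags_response: dict) -> list:
    """Extract model names from a decoded GET /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models(options: OllamaOptions) -> list:
    """Ask the server which models are installed (needs a running server)."""
    with urllib.request.urlopen(options.endpoint("/api/tags")) as resp:
        return model_names(json.loads(resp.read().decode("utf-8")))
```

If `options.model` does not appear in the list returned by `list_local_models(options)`, the model probably still needs to be downloaded.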

Connect the "Ollama Request" node to the relevant "Messages" node and click Run. The chat messages returned by Ollama are printed on the screen, as shown in the image.

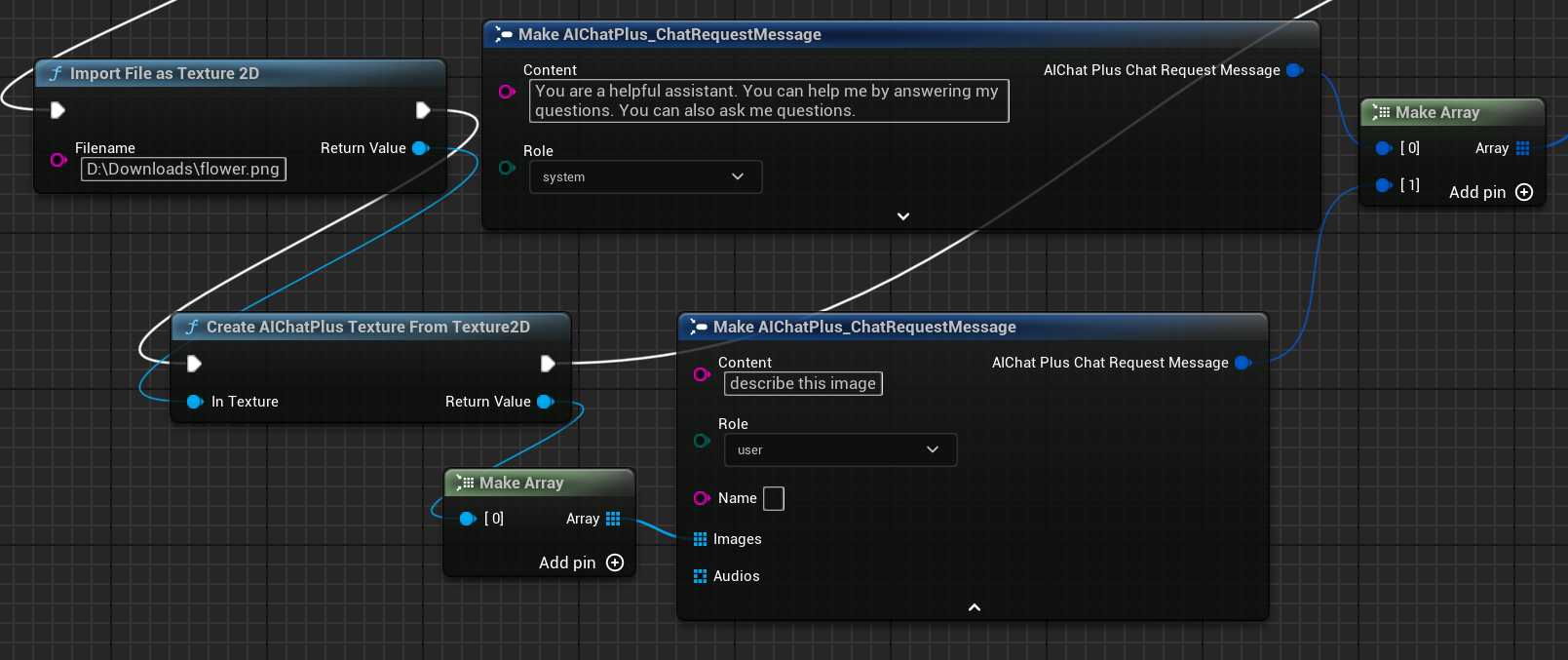
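Outside of Blueprints, the same kind of exchange can be sketched against Ollama's REST endpoint `POST /api/chat`. This is a minimal illustration that assumes a local server at `http://localhost:11434`. It is not a description of how the "Ollama Request" node works internally, and the function names are hypothetical.

```python
import json
import urllib.request


def build_chat_payload(model: str, messages: list) -> dict:
    """Build the JSON body for POST /api/chat with streaming turned off."""
    return {"model": model, "messages": messages, "stream": False}


def extract_reply(chat_response: dict) -> str:
    """Return the assistant text from a non-streaming /api/chat response."""
    return chat_response["message"]["content"]


def chat(model: str, messages: list, base_url: str = "http://localhost:11434") -> str:
    """Send the messages to Ollama and return the reply (needs a running server)."""
    request = urllib.request.Request(
        url=base_url.rstrip("/") + "/api/chat",
        data=json.dumps(build_chat_payload(model, messages)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))
```

A call such as `chat("<your model>", [{"role": "user", "content": "Hello"}])` would return the assistant's reply as a string, where the model name is whichever model is installed on your server.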

Generate Text from Image

Ollama also supports multimodal models such as llava, which add vision capability.

First, obtain a multimodal model file; this example uses moondream:latest.

In the "Options" node, set "Model" to moondream:latest.

Read the image flower.png and set it in the message.

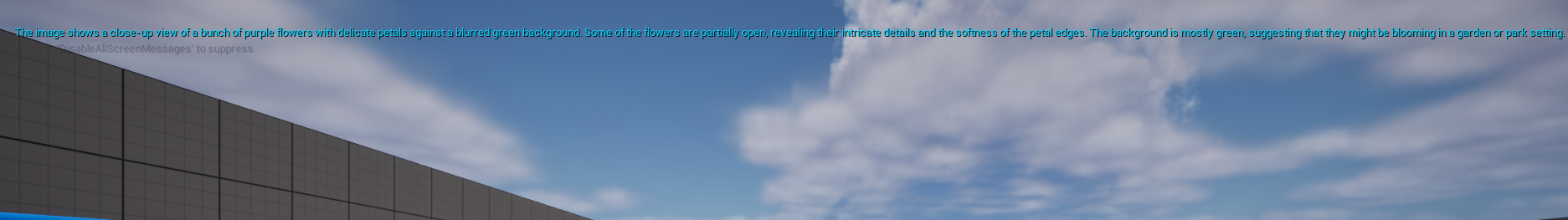
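At the REST level, Ollama accepts images as base64-encoded strings in the `images` field of a chat message. The sketch below shows how such a message could be assembled. The function names are hypothetical, and the plugin's own handling of the image may differ.

```python
import base64


def build_image_message(prompt: str, image_bytes: bytes) -> dict:
    """Build a user message carrying one base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"role": "user", "content": prompt, "images": [encoded]}


def build_vision_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a non-streaming POST /api/chat body with a single image message."""
    return {
        "model": model,
        "messages": [build_image_message(prompt, image_bytes)],
        "stream": False,
    }
```

For the example in this section, the image bytes would come from `open("flower.png", "rb").read()`, the model would be `moondream:latest`, and the resulting payload would be sent as JSON to `/api/chat` on the configured "Base Url".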

Connect the message to the "Ollama Request" node and click Run. The chat messages returned by Ollama are printed on the screen.

Original: https://wiki.disenone.site/en

This post is licensed under CC BY-NC-SA 4.0; please attribute the source when reproducing it.


This post was translated using ChatGPT. Please provide feedback and point out any omissions.